Available on: Open Source Edition, Enterprise Edition, Cloud (version 1.0.0)

Build and modify flows directly from natural language prompts.

The AI Copilot can generate and iteratively edit declarative flow code with AI-assisted suggestions.

Overview

The AI Copilot helps you build and modify flows directly from natural language prompts. Describe what you are trying to build, and Copilot generates the YAML flow code for you to accept or adjust. Once your initial flow exists, you can refine it iteratively with Copilot's help, adding new tasks or adjusting triggers without touching unrelated parts of the flow. Everything stays as code, in Kestra's usual declarative syntax.

Configuration

To add Copilot to your flow editor, add the following to your Kestra configuration:

```yaml
kestra:
  ai:
    type: gemini
    gemini:
      model-name: gemini-2.5-flash
      api-key: YOUR_GEMINI_API_KEY
```

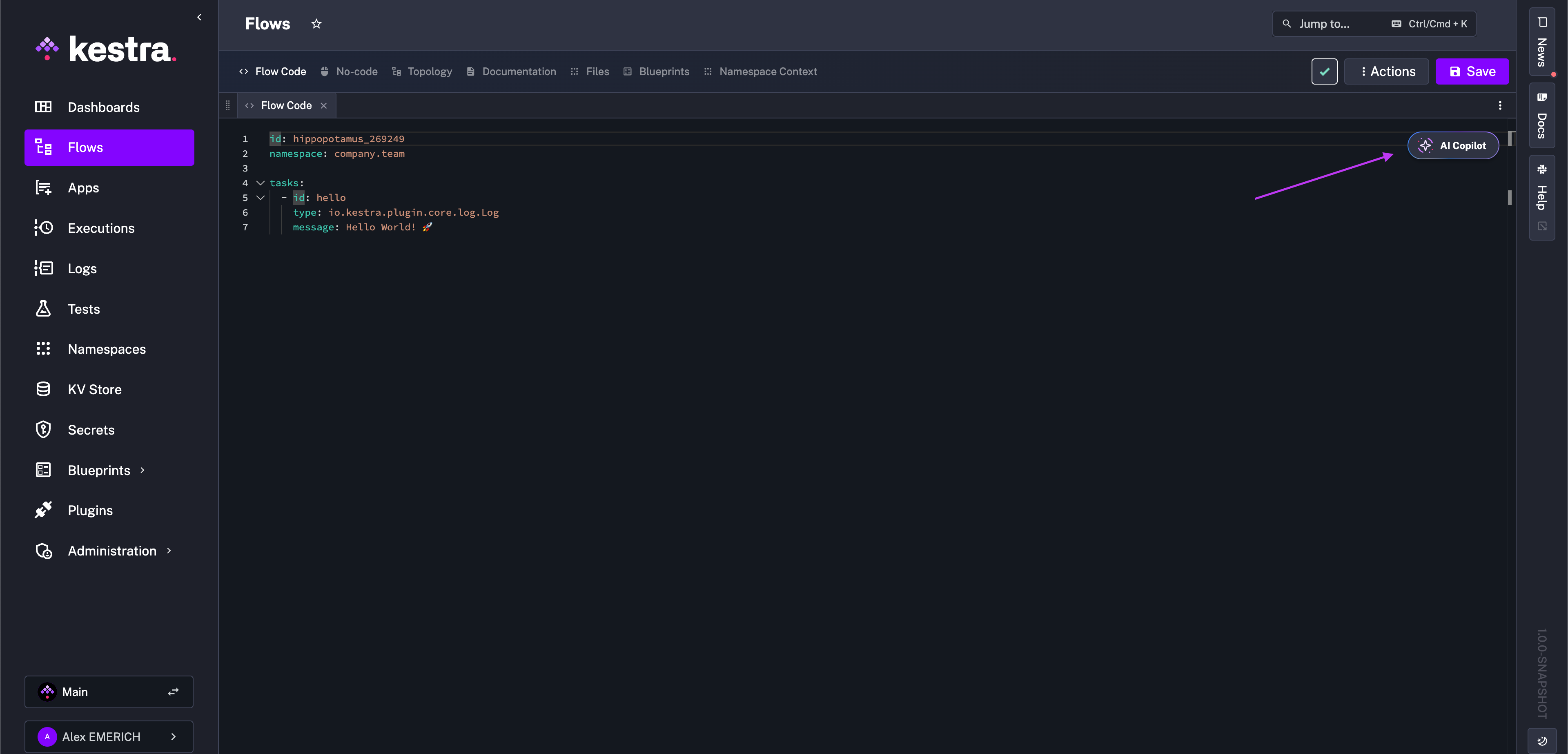

Replace the `api-key` value with your Google Gemini API key, and Copilot will appear in the top right corner of the flow editor. Optionally, you can add the following properties to your configuration:

- temperature: Controls randomness in responses. Lower values make outputs more focused and deterministic, while higher values increase creativity and variability.
- topP (nucleus sampling): Ranges from 0.0 to 1.0. Lower values (0.1–0.3) produce safer, more focused responses for technical tasks, while higher values (0.7–0.9) encourage more creative and varied outputs.
- topK: Typically ranges from 1 to 200 or more, depending on the API. Lower values restrict choices to a few predictable tokens, while higher values allow more options and greater variety in responses.
- maxOutputTokens: Sets the maximum number of tokens the model can generate, capping the response length.
- logRequests: Creates logs in Kestra for LLM requests.
- logResponses: Creates logs in Kestra for LLM responses.
- baseURL: Specifies the endpoint address where the LLM API is hosted.
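As a rough illustration, a Gemini configuration that sets some of these optional properties might look like the sketch below. It rests on two assumptions: that the optional properties sit under the provider key next to `model-name` and `api-key`, and that they use the same kebab-case spelling as those keys (`top-p`, `max-output-tokens`, and so on). The values are arbitrary examples of a low-variability setup for code generation. Check the Kestra configuration reference for the exact keys your version accepts.

```yaml
kestra:
  ai:
    type: gemini
    gemini:
      model-name: gemini-2.5-flash
      api-key: YOUR_GEMINI_API_KEY
      # Assumed placement and kebab-case spelling of the optional properties
      temperature: 0.2         # low randomness for more deterministic YAML
      top-p: 0.3               # nucleus sampling: keep only the most likely tokens
      top-k: 40                # sample from at most the 40 most likely tokens
      max-output-tokens: 4096  # cap on response length, in tokens
      log-requests: true       # log LLM requests in Kestra
      log-responses: true      # log LLM responses in Kestra
```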

The open-source version supports only Google Gemini models. Enterprise Edition users can configure other LLM providers, including Amazon Bedrock, Anthropic, Azure OpenAI, DeepSeek, Google Gemini, Google Vertex AI, Mistral, OpenAI, OpenRouter, and all open-source models supported by Ollama. See the Enterprise Edition Copilot configurations section below for your provider's settings. If you use a different provider, please reach out to us and we'll add it.

Build flows with Copilot

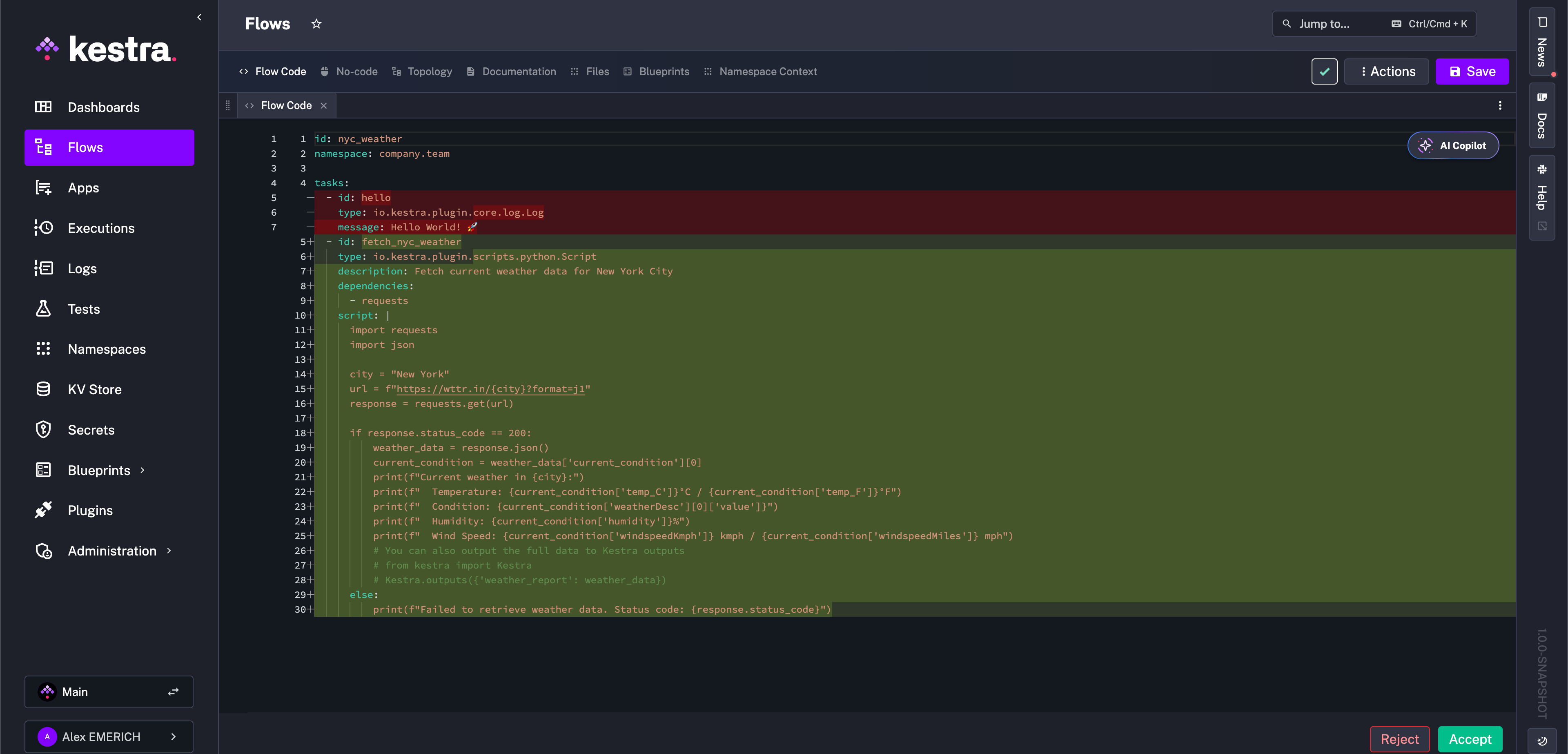

In the above demo, we want to create a flow that uses a Python script to fetch New York City weather data. To get started, open the Copilot and write a prompt. For example:

Create a flow with a Python script that fetches weather data for New York City

Copilot then generates YAML directly in the flow editor. You can accept or reject the suggestion from the bottom right corner.
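For illustration, the flow Copilot proposes for this prompt could resemble the sketch below. The actual output depends on the model and will likely differ. The flow `id`, the `company.team` namespace, and the use of the public Open-Meteo forecast API are assumptions made for this example. Copilot is not guaranteed to produce any of them.

```yaml
id: nyc_weather
namespace: company.team

tasks:
  - id: fetch_weather
    type: io.kestra.plugin.scripts.python.Script
    # Install the HTTP client before the script runs
    beforeCommands:
      - pip install requests
    script: |
      import requests

      # Assumed data source: Open-Meteo, which requires no API key
      params = {
          "latitude": 40.71,
          "longitude": -74.01,
          "current_weather": "true",
      }
      response = requests.get(
          "https://api.open-meteo.com/v1/forecast", params=params, timeout=30
      )
      response.raise_for_status()
      print(response.json()["current_weather"])
```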

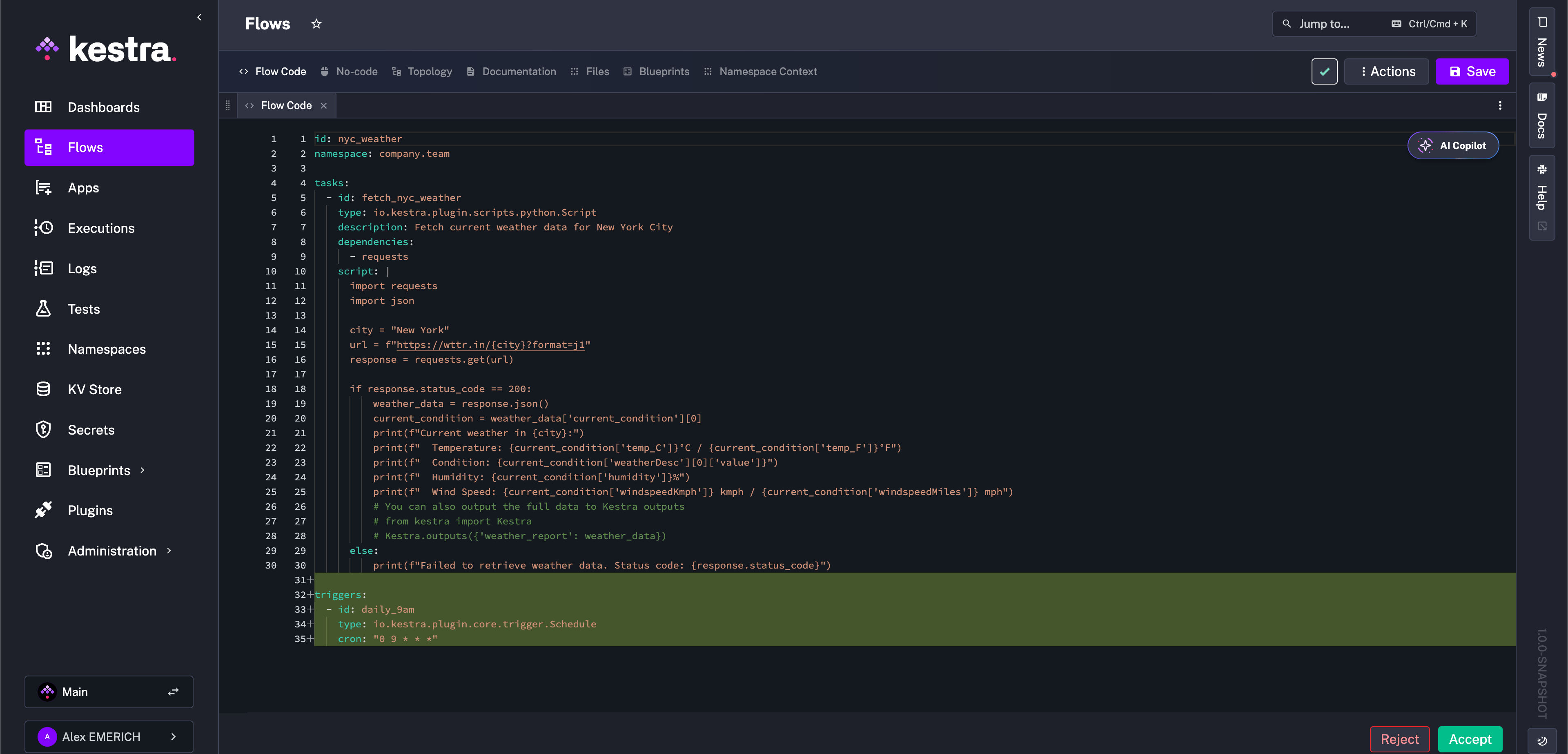

If you accept it, the flow is created. You can then save it for execution, edit it manually, or keep iterating on it with Copilot. For example, suppose you want to add a trigger that runs the flow on a schedule. Reopen Copilot and describe the trigger you want, such as:

Add a trigger to run the flow every day at 9 AM

Copilot again suggests a change, but only to the targeted section, in this case a `triggers` block. The same applies when you want Copilot to work only on a specific task, input, plugin default, and so on.
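A suggestion for the prompt above would touch only the flow's `triggers` section. It might look something like the block below. The trigger `id` and the `America/New_York` time zone are illustrative assumptions. If `timezone` is omitted, the cron expression is evaluated in the Kestra server's default time zone, so "9 AM" may not mean New York time.

```yaml
triggers:
  - id: daily_9am
    type: io.kestra.plugin.core.trigger.Schedule
    # Minute 0, hour 9, every day of every month
    cron: "0 9 * * *"
    # Assumed time zone for interpreting the cron expression
    timezone: America/New_York
```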

You can keep collaborating with Copilot until the flow is exactly as you imagined. Accepted suggestions are always written declaratively and managed as code, so you can track the revision history in the built-in Revisions tab or with Git Sync.

Starter prompts

To get started with Copilot, here are some example prompts to test, iterate on, and use as a starting point for collaboratively building flows with AI in Kestra:

Enterprise Edition Copilot configurations

Enterprise Edition users can configure any supported LLM provider, including Amazon Bedrock, Anthropic, Azure OpenAI, DeepSeek, Google Gemini, Google Vertex AI, Mistral, OpenAI, OpenRouter, and all open-source models supported by Ollama. The required properties differ slightly between providers, so adjust the configuration for yours.

Amazon Bedrock

```yaml
kestra:
  ai:
    type: bedrock
    bedrock:
      model-name: amazon.nova-lite-v1:0
      access-key-id: BEDROCK_ACCESS_KEY_ID
      secret-access-key: BEDROCK_SECRET_ACCESS_KEY
```

Anthropic

```yaml
kestra:
  ai:
    type: anthropic
    anthropic:
      model-name: claude-opus-4-1-20250805
      api-key: CLAUDE_API_KEY
```

Azure OpenAI

```yaml
kestra:
  ai:
    type: azure-openai
    azure-openai:
      model-name: gpt-4o-2024-11-20
      api-key: AZURE_OPENAI_API_KEY
      tenant-id: AZURE_TENANT_ID
      client-id: AZURE_CLIENT_ID
      client-secret: AZURE_CLIENT_SECRET
      endpoint: "https://your-resource.openai.azure.com/"
```

DeepSeek

```yaml
kestra:
  ai:
    type: deepseek
    deepseek:
      model-name: deepseek-chat
      api-key: DEEPSEEK_API_KEY
      base-url: "https://api.deepseek.com/v1"
```

Google Gemini

```yaml
kestra:
  ai:
    type: gemini
    gemini:
      model-name: gemini-2.5-flash
      api-key: YOUR_GEMINI_API_KEY
```

Google Vertex AI

```yaml
kestra:
  ai:
    type: googlevertexai
    googlevertexai:
      model-name: gemini-2.5-flash
      project: GOOGLE_PROJECT_ID
      location: GOOGLE_CLOUD_REGION
      endpoint: VERTEX-AI-ENDPOINT
```

Mistral

```yaml
kestra:
  ai:
    type: mistralai
    mistralai:
      model-name: mistral-small-latest
      api-key: MISTRALAI_API_KEY
      base-url: "https://api.mistral.ai/v1"
```

Ollama

```yaml
kestra:
  ai:
    type: ollama
    ollama:
      model-name: llama3
      base-url: http://localhost:11434
```

OpenAI

```yaml
kestra:
  ai:
    type: openai
    openai:
      model-name: gpt-5-nano
      api-key: OPENAI_API_KEY
      base-url: https://api.openai.com/v1
```

OpenRouter

```yaml
kestra:
  ai:
    type: openrouter
    openrouter:
      model-name: x-ai/grok-beta
      api-key: OPENROUTER_API_KEY
      base-url: "https://openrouter.ai/api/v1"
```
